Projects

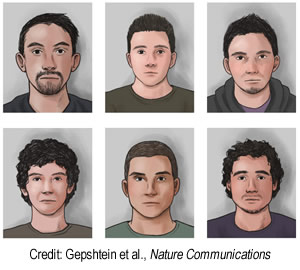

Perceptual scaling for eyewitness identification

Albright (Salk), Gepshtein (Salk) | Eyewitness misidentification accounts for 70% of verified erroneous convictions. To address this alarming phenomenon, previous research has focused on factors that influence the likelihood of correct identification, such as the manner in which a police lineup is conducted. Traditional lineups rely on overt eyewitness responses that confound two covert factors: strength of recognition memory and the criterion for deciding what memory strength is sufficient for identification. We have developed a new lineup that permits estimation of memory strength independent of decision criterion. Our approach employs powerful techniques developed in studies of perception and memory: perceptual scaling and signal detection analysis. Using these tools, we scale memory strengths elicited by lineup faces, and then employ optimal classifiers whose task is to distinguish the perpetrator from an innocent suspect using the scaling data. These methods allow one to reveal the structure of memory inaccessible using traditional lineups, to render accurate identifications uninfluenced by decision bias, to evaluate performance of individual witnesses, and to calibrate libraries of face images in order to create fair lineups.
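
The separation of memory strength from decision criterion can be illustrated with the textbook equal-variance Gaussian signal detection model. The sketch below is not the lineup procedure described above; the function name `sdt_measures` and the example hit and false-alarm rates are assumptions chosen for illustration. It shows two hypothetical witnesses whose overt responses differ, yet whose estimated sensitivity is the same.

```python
from statistics import NormalDist
from typing import Tuple


def sdt_measures(hit_rate: float, false_alarm_rate: float) -> Tuple[float, float]:
    """Return (d_prime, criterion) under the equal-variance Gaussian model.

    d_prime estimates memory strength (sensitivity); criterion estimates
    response bias. Rates must lie strictly between 0 and 1, because the
    inverse normal CDF is undefined at the endpoints.
    """
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    z_hit = z(hit_rate)
    z_fa = z(false_alarm_rate)
    d_prime = z_hit - z_fa
    criterion = -0.5 * (z_hit + z_fa)
    return d_prime, criterion


# Hypothetical witnesses: one lenient (says "yes" often), one strict.
lenient = sdt_measures(hit_rate=0.84, false_alarm_rate=0.50)
strict = sdt_measures(hit_rate=0.50, false_alarm_rate=0.16)

print("lenient: d' = %.2f, c = %+.2f" % lenient)
print("strict:  d' = %.2f, c = %+.2f" % strict)
```

Both witnesses have a sensitivity of about 0.99, while their criteria have opposite signs. A raw count of "yes" responses would rank the lenient witness higher, even though under this model the two witnesses hold equally strong memories.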

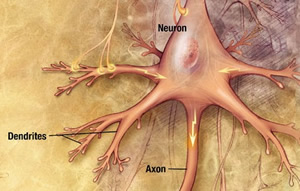

Neural mechanisms of adaptation in visual systems

Albright (Salk), Gepshtein (Salk) | One of the fundamental tenets of sensory biology is that sensory systems adapt to environmental change. It has been argued that adaptation should have the effect of optimizing sensitivity to the new environment. We make this premise concrete using a normative theory of visual motion perception which makes predictions about visual spatiotemporal sensitivity as a function of environmental statistics. Adaptive optimization should be manifested as a change in spatiotemporal sensitivity for an observer and for the underlying motion-sensitive neurons. We test these predictions by measuring effects of adaptation on visual sensitivity in the context of the neuronal representation of speed of visual motion. The long-term goal of this project is to contribute to the understanding of biological substrates of visual perception. Detailed knowledge of normal functions of visual cortex shall provide insights into neural events that underlie visual sensitivity and the effects of visual experience, which will ultimately aid the treatment and prevention of neurologic and neuropsychiatric disorders of vision. These goals are pertinent to development and use of prosthetic and behavioral therapies for the visually handicapped.

Object recognition in natural dynamic environments

Sharpee (Salk) | Understanding neural mechanisms of object recognition is a major unsolved problem in sensory neuroscience that has broad implications for the design of artificial recognition systems, including sensory prostheses. These neural mechanisms are difficult to measure for three reasons. First, neurons that represent objects do not respond to simple visual patterns, such as white noise. Second, these neurons respond to “natural stimuli” (signals derived from the natural sensory environment) that have complicated statistical properties, which make data analysis difficult. Third, neurons that represent objects often do so based on conjunctions of multiple features and in a manner that is invariant to changes in the position, illumination and scale of the preferred object. To address these difficulties, our group develops statistical methods that can be used with natural stimuli and can characterize response properties of neurons that have different types of invariance and are selective to conjunctions of multiple image features. We apply these statistical methods to analyze responses of neurons involved in object recognition, recorded while animals view dynamic scenes of natural environments.

Distributed information processing in sensory systems

Navlakha (Salk) | Biological systems solve information processing problems that are similar to the problems faced by human-engineered systems, including coordinated decision making, routing and navigation. In biological systems, information is typically processed by networks – often without central control – using nontrivial internal structures (topologies). At the microscopic level, for example, interactions between the molecules inside the cell divide into subnetworks. The subnetworks internal to the system are less exposed to the environmental noise. They are more connected and less robust than the external subnetworks, which use sparser topologies to isolate the spread of propagating noise. Similar principles are found in macroscopic biological systems, such as the neuronal network of the free-living nematode worm C. elegans. We are developing simple evolutionary models that can reproduce these topologies based on feedback from the environment. We are then applying these observations to design communication networks (wireless networks and networks of sensors) optimized for noisy or malicious environments.

Vision science for dynamic architecture

Gepshtein (Salk), Lynn (UCLA), McDowell (USC) | The relationship between the person and the built environment is dynamic. This dynamism unfolds over many spatial and temporal scales. Consider the varying viewing distances and angles of observation, and also the built environments that contain moving parts and moving pictures. The architect wants to predict human responses for the full range of these possibilities: a daunting task. We study how this challenge can be reduced using the systematic understanding of perception provided by sensory neuroscience. Our starting point is the basic fact that human vision is selective. It is exceedingly sensitive to some forms of spatial and temporal information, but is blind to others. A comprehensive map of this selectivity has been worked out in tightly controlled laboratory studies of visual perception, where the subject responds to stimuli on a flat screen at a fixed viewing distance. We translate this map from restricted laboratory conditions to the scale of large built environments. Using a pair of industrial robots carrying a projector and a large screen, we created the conditions for probing the limits of visual perception on the scale relevant to architectural design. The large dynamic images propelled through space allowed us to trace boundaries of the solid regions in which different kinds of visual information could or could not be accessed. For this initial study, we concentrated on several paradoxical cases, such as the diminished ability to pick up visual information as its source approaches the observer, and the abrupt change in visibility following only a slight change in the viewing distance.

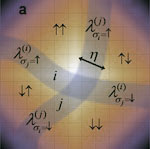

Adaptive perception of motion in natural environments

Sharpee (Salk), Gepshtein (Salk) | Nervous systems implement a wide range of sensory behaviors using limited neural resources. This feat is made possible by the remarkable flexibility of neural systems. They rapidly allocate resources to the imminently important features of the environment, prompted by the organism’s variable goals and the variable environment. One of the well-known manifestations of this flexibility is sensory adaptation: a change in sensory performance caused by environmental change. Sensory adaptation has been extensively studied in visual systems using artificial optical stimuli, producing paradoxical results. Recent theoretical and experimental studies suggested that the inconsistencies could arise because effects of adaptation were sampled narrowly, using restricted sets of stimuli, and also because changes in the environment were simulated in a manner grossly dissimilar to the changes that normally occur in the natural environment. We overcome these weaknesses by measuring effects of adaptation broadly, using optical patterns that approximate natural stimuli, and by varying stimuli in a manner that mimics the variability of the natural world. We consider different theoretical approaches (such as theories of optimal coding and theories of efficient allocation) and test their predictions using novel psychophysical methods that allow us to rapidly assay large-scale characteristics of sensory performance and their changes.

Towards optimal sensory ecology of built environment

Gepshtein (Salk), Panda (Salk), Fisher (USC), McDowell (USC) | Biomedical science has developed refined metrics of human health and disease and identified a multitude of internal and external factors that contribute to health and well-being. Translation of this research has been largely mediated by pharmaceutical medicine, disregarding other important channels of translation. One of the key non-pharmaceutical channels is sensory ecology. Today, humans spend more than 87% of their lives indoors, where sensory stimulation is dominated by artificial sources, such as light fixtures, visual displays and loudspeakers. This environment is desynchronized from the natural day-night cycle of ambient light, and it presents us with spatiotemporal patterns of structured light that differ from the patterns in which we evolved. Recent basic research on the biological mechanisms of human sensory and perceptual abilities, and their dependence on circadian rhythms, carries tremendous potential for improving the design of built environments toward optimum health and performance. We investigate circadian variability of human sensory performance in a fully controlled indoor sensory ecology simulating working environments of individuals exposed to multiple sources of visual information under a wide range of lighting conditions and information load. The visual information is presented in several sighting formats: (a) using desktop displays positioned as is common in office settings; (b) in a partially immersive environment, where users are surrounded by large displays or wear a transparent head-mounted display that overlays digital information on a physical background (augmented reality); and (c) in a fully immersive environment, where a nontransparent head-mounted display fills the field of view.

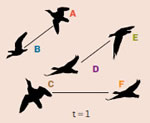

Prospective optimization with limited resources

Snider (UCSD), Lee (UCSD), Poizner (UCSD), Gepshtein (Salk) | Our future is uncertain because some future events are unpredictable, and also because our ability to foresee consequences of our own actions is limited. We study how humans select actions under such uncertainty, in view of the exponentially expanding number of consequences on a branching multivalued dynamic visual stimulus. By comparing human behavior with behavior of ideal actors, we identify the strategies used by humans in terms of how far into the future they look (their “depth of computation”) and how often they attempt to incorporate new information about the future. In contrast to previous evidence that humans tend to reduce the computational difficulty of decision-making by means of simplifying heuristics, we find that actors can perform an exhaustive computation of all possible future scenarios within a horizon limited by a fixed number of computations: a limited resource. Under increasing time pressure, the actors do not resort to heuristics; they either reduce the computational horizon or recalculate the expected rewards less frequently.
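
The idea of exhaustive computation within a limited horizon can be sketched as a depth-limited expected-value search over a branching tree. This is a minimal illustration, not the ideal-actor model used in the study; the toy world `WORLD`, its state and action names, and the function `plan` are all hypothetical.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical toy world: state -> action -> list of
# (probability, immediate reward, next state) outcomes.
Outcome = Tuple[float, float, str]
WORLD: Dict[str, Dict[str, List[Outcome]]] = {
    "start": {
        "grab": [(1.0, 1.0, "empty")],                         # small, certain reward
        "wait": [(0.5, 0.0, "rich"), (0.5, 0.0, "empty")],     # gamble on a later payoff
    },
    "rich": {"stay": [(1.0, 5.0, "rich")]},
    "empty": {"stay": [(1.0, 0.0, "empty")]},
}


def plan(state: str, horizon: int) -> Tuple[float, Optional[str]]:
    """Exhaustively evaluate every branch up to `horizon` steps ahead.

    Returns the best expected total reward and the first action that
    achieves it. The number of branches visited grows exponentially
    with the horizon, which is what makes depth a limited resource.
    """
    if horizon == 0:
        return 0.0, None
    best_value, best_action = float("-inf"), None
    for action, outcomes in WORLD[state].items():
        expected = sum(
            prob * (reward + plan(next_state, horizon - 1)[0])
            for prob, reward, next_state in outcomes
        )
        if expected > best_value:
            best_value, best_action = expected, action
    return best_value, best_action


for depth in (1, 2, 3):
    value, action = plan("start", depth)
    print("horizon %d: choose %r, expected reward %.1f" % (depth, action, value))
```

In this toy world an actor who looks one step ahead takes the small certain reward, whereas an actor who looks two or more steps ahead prefers to wait. Shortening the horizon is one way to trade decision quality for fewer computations.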

Optimal decision making in dynamic environments

Sharpee (Salk), Chalasani (Salk) | Evolution has exerted strong pressure to optimize the speed and accuracy of decisions made by animals in dynamic environments using only partial sensory information. Even animals as small as the nematode worm C. elegans (with a nervous system of just 302 neurons) can respond optimally to changing environmental conditions over time scales that are substantial compared to their lifespan. In contrast, studies of human decision making often find inefficiencies, albeit on different time scales. We combine theoretical and experimental methods to probe how small neural circuits can implement decision making in rich dynamic environments. Our recent results indicate that circuits composed of just a few neurons can implement maximally informative foraging strategies that allow worms to search for food over areas a thousand times larger than their body size (Calhoun, Chalasani, & Sharpee, eLife 2014). Using large-scale simulations, we now study how combining the small circuits as modules within a larger network can improve the robustness and diversity of decisions made on different time scales.

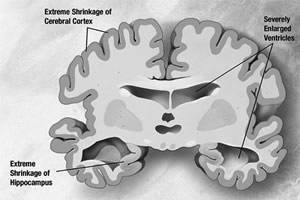

Automated individual displays for persons with dementia

Albright (Salk), Gepshtein (Salk), Zeisel (Hearthstone Alzheimer Care) | An estimated 5.4 million Americans are currently suffering from Alzheimer’s disease. Persons with dementia have significant difficulties with spatial orientation and wayfinding. Disorientation and wayfinding difficulties negatively affect the quality of life of persons with dementia and can lead to responsive behaviors, such as agitation, aimless wandering, apathy, anxiety, and repetitive questioning. We developed a measurement platform for rapid evaluation of the individual visual abilities of persons with dementia, and we use the results of these measurements to control visual displays that automatically and dynamically adjust themselves to the person's distance from the display and to his or her individual visual abilities.

A lightweight platform for visual psychophysics

Jurica (RIKEN Brain Science Institute), Gepshtein (Salk) | Modular Psychophysics (mPsy) is a compact but versatile framework for computer-aided visual psychophysics. It is written in Python, but it can be controlled using other programming languages, such as MATLAB and R. The framework consists of two parts: a presentation module and a communication module. The former renders stimuli; the latter is an interface for local or remote control of the stimulus and procedure. The communication module mediates between the core of mPsy and other programming languages. mPsy differs from other psychophysical software in three ways. It requires a small amount of code, it is self-contained, and it is open to control over networks. The platform is designed for exporting experiments from the traditional laboratory environment to “research in the field” and it is being tested and developed in several projects at the Collaboratory including Automated individual displays for persons with dementia and Vision science for dynamic architecture.